I am using face_detector to detect human. I work quite well I can detect human and visualize on Rviz.

However, I would like to know that is it possible to transform detected human position from camera_frame to odom frame?

I am using pepper. Its frame view is

odom >> base_link >> torso >> neck >> head >> CameraTop_frame >> CameraTop_optical_frame

Can I use `

↧

Transform human pose from camera_frame to Odom frame

↧

tf invalid argument

Hi,

I'm trying to follow [this](https://github.com/ros-industrial/industrial_training/wiki/Coordinate-Transforms-using-TF) tutorial, and am running into an error. The error is

[ERROR] [1497197044.658735156]: Exception thrown while processing service call: Invalid argument passed to lookupTransform argument target_frame in tf2 frame_ids cannot be empty

[ERROR] [1497197044.663532989]: Service call failed: service [/localize_part] responded with an error: Invalid argument passed to lookupTransform argument target_frame in tf2 frame_ids cannot be empty

The code I am running is:

bool localizePart(myworkcell_core::LocalizePart::Request& req,

myworkcell_core::LocalizePart::Response& res)

{

// Read last message

fake_ar_publisher::ARMarkerConstPtr p = last_msg_;

if (!p) return false;

res.pose = p->pose.pose;

tf::Transform cam_to_target;

tf::poseMsgToTF(p->pose.pose, cam_to_target);

tf::StampedTransform req_to_cam;

listener_.lookupTransform(req.base_frame, p->header.frame_id, ros::Time(0), req_to_cam);

req_to_target = req_to_cam * cam_to_target;

tf::poseTFToMsg(req_to_target, res.pose);

return true;

}

From what I've seen, this error implies one of my arguments in the lookupTransforms function is not initialized. I'm not sure how to fix this, any help will be appreciated.

----------

/*

* vision_node.cpp

*

* Created on: May 9, 2017

* Author: donni

*/

/**

* Simple ROS node

*/

#include

#include

#include

#include

class Localizer

{

public:

//Here we are defining the variables which are used in the Localizer class

ros::ServiceServer server_;

ros::Subscriber ar_sub_;

fake_ar_publisher::ARMarkerConstPtr last_msg_;

tf::TransformListener listener_;

tf::Transform req_to_target;

Localizer(ros::NodeHandle& nh) // This is the constructor

{

ar_sub_ = nh.subscribe("ar_pose_marker", 1,&Localizer::visionCallback, this);

server_ = nh.advertiseService("localize_part", &Localizer::localizePart, this);

}

void visionCallback(const fake_ar_publisher::ARMarkerConstPtr& msg)

{

last_msg_ = msg;

// ROS_INFO_STREAM(last_msg_->pose.pose);

}

bool localizePart(myworkcell_core::LocalizePart::Request& req,

myworkcell_core::LocalizePart::Response& res)

{

// Read last message

fake_ar_publisher::ARMarkerConstPtr p = last_msg_;

if (!p) return false;

res.pose = p->pose.pose;

tf::Transform cam_to_target;

tf::poseMsgToTF(p->pose.pose, cam_to_target);

tf::StampedTransform req_to_cam;

listener_.lookupTransform(req.base_frame, p->header.frame_id, ros::Time(0), req_to_cam);

req_to_target = req_to_cam * cam_to_target;

tf::poseTFToMsg(req_to_target, res.pose);

return true;

}

};

int main(int argc, char* argv[])

{

// This must be called before anything else ROS-related

ros::init(argc, argv, "vision_node");

// Create a ROS node handle

ros::NodeHandle nh;

ROS_INFO("Hello, World!");

// The Localizer class provides this node's ROS interfaces

Localizer localizer(nh);

ROS_INFO("Vision node starting");

// Don't exit the program.

ros::spin();

}

↧

↧

what does this function in hector mapping mean?

Hi, all, I am learning the source code of [hector mapping](https://github.com/tu-darmstadt-ros-pkg/hector_slam). I have a question about a function in [../hector_slam_lib/map/OccGridMapUtil.h](https://github.com/tu-darmstadt-ros-pkg/hector_slam/blob/catkin/hector_mapping/include/hector_slam_lib/map/OccGridMapUtil.h#L349)

Eigen::Affine2f getTransformForState(const Eigen::Vector3f& transVector) const

{

return Eigen::Translation2f(transVector[0], transVector[1]) * Eigen::Rotation2Df(transVector[2]);

}

By studying the source code, I know that the input parameter 'transVector' is the last ScanMatchPose (also the robot's last pose?) in map frame (or map coordinate system, the last ScanMatchPose in world frame has been transformed into map frame by **[GridMapBase::getMapCoordsPose](https://github.com/tu-darmstadt-ros-pkg/hector_slam/blob/catkin/hector_mapping/include/hector_slam_lib/map/GridMapBase.h#L235)**).

The return value is a transform with Eigen::Affine2f type, this value will be used in [OccGridMapUtil::getCompleteHessianDerivs](https://github.com/tu-darmstadt-ros-pkg/hector_slam/blob/catkin/hector_mapping/include/hector_slam_lib/map/OccGridMapUtil.h#L64)

to transfrom a point (currPoint in code). The currPoint's value depends on the level of the map, it has been computed by [DataPointContainer::setFrom](https://github.com/tu-darmstadt-ros-pkg/hector_slam/blob/catkin/hector_mapping/include/hector_slam_lib/scan/DataPointContainer.h#L46) from the original laser scan data.

Does anyone can tell me what is this function used for? What is the underlying mathematcial theory ?

Thank you!

@Stefan Kohlbrecher could you give me an answer? Thanks!

**EDIT**

According the API document on Eigen,

[http://eigen.tuxfamily.org/dox/classEigen_1_1Translation.html#a14863add291014cebe115c5d66f64497](http://eigen.tuxfamily.org/dox/classEigen_1_1Translation.html#a14863add291014cebe115c5d66f64497)

It is "Concatenates a translation and a rotation". Ok, but why the return variable type is a Affine2f.

Is a Affine2f a 3X3 matrix? and is a Affine2f a isometric transformation type?

Thanks!~

↧

Camera Pose Calibration

Is there an equivalent package to **camera pose calibration** in ROS Hydro? Finding the exact transform connecting the frame of a new camera to an existing robot's **tf** tree is almost impossible ...

↧

Can tf/tf2 resolve "hidden transforms" ?

Howdy folks,

I have question relating to the following transform tree:

**[/nav_frame]->[/base_link]->[/sensor_platform] -> [/IMU_AHRS]**

The sensor platform is literally a platform that has sensors mounted on it (GPS antenna, IMU-AHRS, etc.) and is attached to the robotic platform (/base_link).

The nav_frame is a local ENU frame in which I do my navigation computations: it's placed on the WGS84 ellipsoid at the same location as the base_link, and it always points north.

I got an IMU-AHRS system (with magnetometer and world magnetic map) mounted on the sensor_platform that I will use to resolve the transformation between nav_frame and base_link.

So, I have the following situation:

[/nav_frame]->[/base_link] UNKNOWN, this is what I need to resolve

[/base_link] ->[/sensor_platform] is a known and fixed transform

[/sensor_platform] -> [/IMU_AHRS] is a known and fixed transform

**My Question: Can tf (or tf2) somehow automatically (internally) resolve the hidden transform [/nav_frame]->[base_link] when it's given the measured transform [/nav_frame] -> [/IMU_AHRS] as produced by the IMU-AHRS system, or do I (as the programmer) have to explicitly compute this transform (which is easy enough using the IMU-AHRS measurements and the fixed transforms relating the IMU_AHRS frame w.r.t. the base_link) and explicitly publish this as the [/nave_frame] -> [/base_link] transform?**

if tf/tf2 can do this "internally", then how?

Thanks in advance

Best regards,

Galto

↧

↧

Transforming a pointcloud2 in python

I've searched and searched, but can't find a good answer to this. I'm using python so don't have access to `pcl_ros` (which seems deprecated anyway?) and other answers are all for older versions of ROS (I'm using kinetic). The `tf2_sensor_msgs` looks like it's supposed to do it, but I can't import it directly (no module found). I'm using the `tf2_ros` package with a Transform buffer and listener.

self.transform_buffer = tf2.Buffer()

self.transform_listener = tf2.TransformListener(self.transform_buffer)

# Later I try:

pointcloud = self.transform_buffer.transform(pointcloud, 'base_link')

....

File "/home/zac/mbot/ros/src/mbot_api/src/web_server.py", line 266, in get_laserscan

pointcloud = ros_thread.get_velodyne_points_for_time(requested_ts)

File "/home/zac/mbot/ros/src/mbot_api/src/web_server.py", line 135, in get_velodyne_points_for_time

pointcloud = self.transform_buffer.transform(pointcloud, 'base_link')

File "/opt/ros/kinetic/lib/python2.7/dist-packages/tf2_ros/buffer_interface.py", line 63, in transform

do_transform = self.registration.get(type(object_stamped))

File "/opt/ros/kinetic/lib/python2.7/dist-packages/tf2_ros/buffer_interface.py", line 202, in get

raise TypeException('Type %s if not loaded or supported'% str(key))

TypeException

I'm receiving a PointCloud2 message in the /velodyne frame and I want to transform it into the /base_link frame.

↧

How to transform the whole /tf frame?

Given I have an occupancy grid map which I would want to transform with a certain [tx,ty,theta] transformation. How do I do it? The tf tree is posted below and also the rviz which contains the grid map. My ultimate goal is that I would have two occupancy grid maps which I would have to apply a transformation to either one of them so that they can be overlapped -- Map Merging. I'm looking for the mechanism in ROS where I can transform the map, particularly the whole /tf frame so that even the robot's pose will be transformed into a new coordinate frame.

http://postimg.org/image/d4xd3d39p/

http://postimg.org/image/vrdqce6x9/

↧

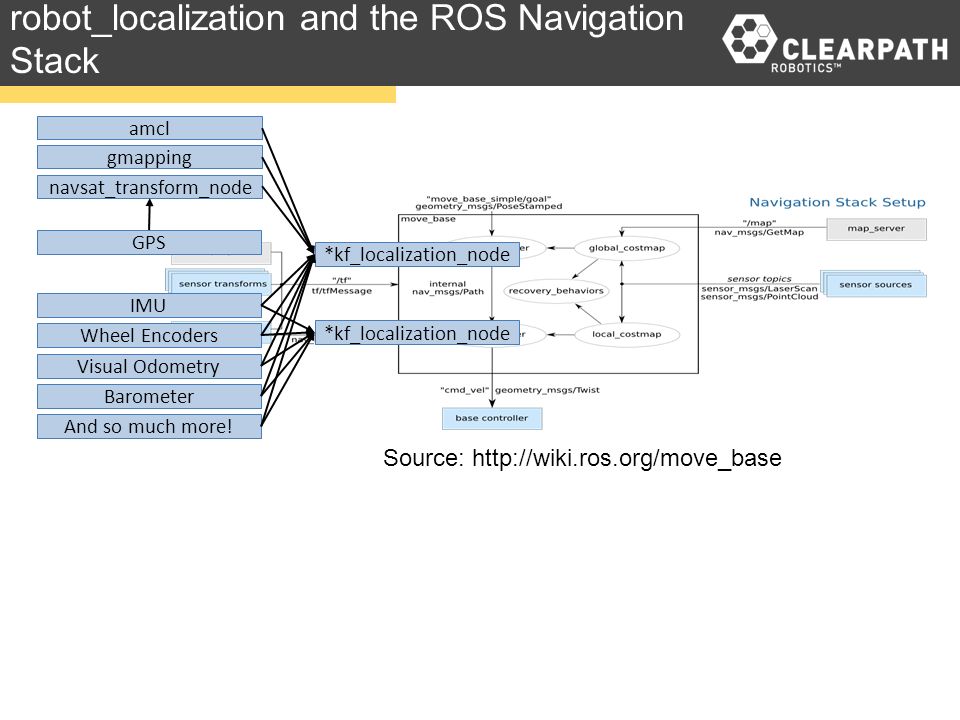

robot_localization and gmapping - how the transform should be done?

We are trying to use `robot_localization` and `gmapping`. What we have done so far: we use gmapping to publish the transform between `map` -> `odom`. Then we tried to apply gps, imu and odom using ekf from robot_localization. We use navsat_transform and one ekf node to fuse all the data. This node does `odom` -> `base_link` transform. This setting does not work very well.

Here: https://roscon.ros.org/2015/presentations/robot_localization.pdf (page 4) there is a picture:

This indicates, that I should fuse gmapping and navsat_transform result in one localization node. Should this be done? How should it be done? Is there an example of it?

↧

how to change the transform according to a transform tree

i have a bag file containing a transform tree and want to make a map from gmapping and hector slam. i want to know what all changes i have to make in the transforms in order to get the result..

↧

↧

Extract robot position from rosbag

I recorded tf-messages (while driving the robot) into a bagfile and wrote a ros node that should extract the positions (base_link-frame) from that bag file.

My idea was to use a tf::Transformer and feed it with all the transforms stored in the tf-messages:

rosbag::Bag bag;

bag.open("tf.bag", rosbag::bagmode::Read);

std::vector topics;

topics.push_back("/tf");

rosbag::View view(bag, rosbag::TopicQuery(topics));

tf::Transformer transformer;

BOOST_FOREACH(rosbag::MessageInstance const m, view)

{

//instantiate bag message

tf::tfMessage::ConstPtr tfPtr = m.instantiate();

BOOST_FOREACH(geometry_msgs::TransformStamped const tfs, tfPtr->transforms)

{

tf::StampedTransform stampedTf;

tf::transformStampedMsgToTF(tfs, stampedTf);

//setTransform returns true!

transformer.setTransform(stampedTf);

...

}

}

The method setTransform(...) always returns true, so I thought that it works...

Each time I get a transform with child_frame == /robot_1/base_link I want to get the map-base_link-transform at the time of this last transform. But the transformer returns false:

if(transformer.canTransform(baseLink, mapFrame, stampedTf.stamp_)==true){

//lookup transform

transformer.lookupTransform(baseLink, mapFrame, stampedTf.stamp_, temp);

}

A few additional facts:

The transformer's latest common time is always = 0:

transformer.getLatestCommonTime(baseLink, mapFrame, time, NULL)

And printing all tf-messages of the bag shows that the tf-tree should be consistent (I'm using stage and used view_frames):

/map -> /robot_1/odom -> /robot_1/base_footprint -> /robot_1/base_link -> /robot_1/base_laser_link

Is something wrong with my code or idea?

Any suggestions for a better solution?

Thanks in advance!

↧

How to remove a TF from a ROS bag?

I have a bag that contains a large TF tree where one TF (base_link->virtual_cam) was calculated based on several TFs and parameters. I would like to replay this bag without this generated TF in order to interactively re-generate it as a play the bag.

Hence the question, how do I remove this TF from the bag? Alternatively, can I play the bag without outputting this TF?

Specs: Ubuntu 12.04, Fuerte

↧

tf2 Transform using FPD(NED like) frame return incorrect value

I have a underwater robot that we would like to use Forward, Port, Down orientation. I thought I could just create a link with flipped vertices.

The urdf below displays correctly in rviz

When I create a tf2 listener and try to transform a point from "depth_sensor" to "body-fixed" frames I get the wrong answer.

My script uses a Z of 10 in the depth_sensor frame and returns 5 in the body-fixed frame when I would expect it to return 15

I am obviously missing something.

test.urdf

↧

How to join TF frames in RViz?

I am new to ROS and I am sure my question reflects this.. Thanks in advance for your time.

I am using Ubuntu 14.04 on a Lenovo W530 laptop. I am running ROS indigo that I installed with `apt-get`.

I have a physical robot I am trying to ROSify. I was lucky to be instructed on how to begin this process (https://answers.ros.org/question/271819/please-suggest-all-packages-to-achieve-slam-for-robot/ both robot and how to rosify can be seen there). I was told to first create a URDF file, which I did (see here: https://pastebin.com/Hzy5jpwP). After this objective I focused on the next task as per the post, and created a robot state publisher. This code is a really ugly and I apologize in advance, and I promise I will clean it up soon. You can see it here: https://pastebin.com/NQVzuUD0. Right now as I manually rotate my LMS, RViz shows it move in real time.... it's so cool!

The problem that I have is that I am not able to visualize all my frames in a single RViz session at the same time. I can open RViz and create a TF and RobotModel types and there are no errors and everything looks good. However as soon as I add a LaserScan type I get a TF error `Transform[sender=unknown_publisher] for frame [laser]: Frame [laser] does not exist`:

:

To see my LMS laser scans I followed the second answer in this post (https://answers.ros.org/question/79281/how-to-show-laser-scanner-data-in-rviz/). When I follow the answer I am able to see the laser but the TF and RobotModel in RViz fault out with this message: `Fixed Frame [laser] does not exist`.

Can you please explain how to amalgamate all the frames so that I can see the laser scan, and the TF, RobotModel and LaserScan types all at the same time without any errors? What code do I need to add to my cpp file? If you could please explain with a small code snippet I would really appreciate it.

If I can add any information please let me know.

Thanks in advance.

↧

↧

not getting map in gmapping transform from odom to base_link problem

i m using gmapping and i m not able to transform from odom to base_link. i have the odometry on wheel_odometry topic which has header frame id as "odom" and child frame id as "base_link" further i m using static transform from base_link to laser. i wrote a code to subscribe to wheel_odometry topic and transform it from to odom to base_link and publish it on odomerty topic but still not able to get the map i m sending the code below when i run the code either i get segmentation failed code dumped or else i dont get the transform i m getting the transform in tf tree as map->odom->base_link->laser when the segmentation core is not dumped also i get the error in gmapping as:

[ WARN] [1512827700.862662060, 1509362043.864097101]: MessageFilter [target=odom ]: Dropped 100.00% of messages so far. Please turn the [ros.gmapping.message_notifier] rosconsole logger to DEBUG for more information.

so what is the problem and and how to correct it

code:

#include "ros/ros.h"

#include "nav_msgs/Odometry.h"

#include

void chatterCallback(const nav_msgs::Odometry::ConstPtr& msg)

{

ros::NodeHandle nh;

ros::Publisher odom_pub = nh.advertise("odom", 50);

ros::Rate r(1.0);

while(nh.ok()){

ros::spinOnce();

ros::Time current_time, last_time;

tf::TransformBroadcaster odom_broadcaster;

double x=msg->pose.pose.position.x;

double y = msg->pose.pose.position.y;

double z = msg->pose.pose.position.z;

double a = msg->pose.pose.orientation.x;

double b = msg->pose.pose.orientation.y;

double c = msg->pose.pose.orientation.z;

double d = msg->pose.pose.orientation.w;

double vx = msg->twist.twist.linear.x;

double vy = msg->twist.twist.linear.y;

double vz =msg->twist.twist.angular.z;

current_time = ros::Time::now();

double dt = (ros::Time::now() - last_time).toSec();

double delta_x = (vx * cos(z) - vy * sin(z)) * dt;

double delta_y = (vx * sin(z) + vy * cos(z)) * dt;

double delta_z = vz * dt;

x += delta_x;

y += delta_y;

z += delta_z;

geometry_msgs::Quaternion odom_quat = tf::createQuaternionMsgFromYaw(z);

geometry_msgs::TransformStamped odom_trans;

odom_trans.header.stamp = ros::Time::now();

odom_trans.header.frame_id = "odom";

odom_trans.child_frame_id = "base_link";

odom_trans.transform.translation.x = x;

odom_trans.transform.translation.y = y;

odom_trans.transform.translation.z = z;

odom_trans.transform.rotation.x = a;

odom_trans.transform.rotation.y = b;

odom_trans.transform.rotation.z = c;

odom_trans.transform.rotation.w = d;

odom_broadcaster.sendTransform(odom_trans);

nav_msgs::Odometry odom;

odom.header.stamp = ros::Time::now();

odom.header.frame_id = "odom";

odom.child_frame_id = "base_link";

odom.pose.pose.position.x = x;

odom.pose.pose.position.y = y;

odom.pose.pose.position.z = z;

odom.pose.pose.orientation.x = a;

odom.pose.pose.orientation.y = b;

odom.pose.pose.orientation.z = c;

odom.pose.pose.orientation.w = d;

odom.child_frame_id = "base_link";

odom.twist.twist.linear.x = vx;

odom.twist.twist.linear.y = vy;

odom.twist.twist.angular.z = vz;

last_time = ros::Time::now();

odom_pub.publish(odom);

r.sleep();

}

}

int main(int argc, char** argv)

{

ros::init(argc, argv, "odom_listener");

ros::NodeHandle n;

ros::Subscriber sub = n.subscribe("wheel_odometry", 100, chatterCallback);

ros::spin();

return 0;

}

↧

base_link to imu_link on Turtlebot 3

The Turtlebot3 firmware publishes a transform from base_link to imu_link. My understanding is that this transform is supposed to define where the IMU sensor is mounted relative to the robot's chassis so that the sensor readings can be correctly transformed into the robot's frame frame of reference. If my understanding is correct, then this transform should be static. The IMU frame moves and rotates together with the robot frame, so relative position of IMU frame should not change (presuming that the IMU is not moving within the robot, and on TB3, it is not).

However, looking at the TB3 firmware (https://github.com/ROBOTIS-GIT/OpenCR.git), file ./arduino/opencr_fw/opencr_fw_arduino/src/arduino/libraries/turtlebot3/turtlebot_waffle/turtlebot3_core/turtlebot3_core.ino, I see that the rotation component of the published transform is set to the orientation readings of the sensor.

Hence, the transform is actually the rotation observed by the IMU (and the same value is actually published as orientation in the IMU message, which is supposed to be the sensor reading within the IMU's frame of reference). That does not make sense to me. That means that within the fixed frame of reference, the orientation of the robot is 2x of what it should be (and I can see that clearly happen in the rviz).

Is this a bug and if it's not, does anyone have a meaningful explanation?

↧

Confusing tf transforms

I have been working a lot with transform matrices these days and the tf nomenclature doesn't make things easier.

For tf_echo, the input frames are defined as such:

tf_echo

When using Python and looking up for a transform it is as follows:

lookupTransform(target_frame, source_frame, time) -> position, quaternion

But what I get are two inverse transforms even though I use the same source_frame and target_frame. Why is that?

↧

Rviz viewpoint transform

In the views panel on Rviz, I can see the transform of the viewpoint w.r.t. the fixed frame. Is there a way to get these values from outside Rviz (i.e. by another ROS node)?

↧

↧

How to transform the pose after map merge

Hi everyone!

I am studying on cooperative simultaneous localization and mapping(C-SLAM). I found some tools like [Map Merger Tool](https://github.com/ahhda/Map-Merge-Tool) can help me to merge the maps by using OpenCV.

However, how I can transform the pose of each robot and update the map of each robot, so that they can locate themselves and do some cooperative actions in the merged map? Could TF help in this case?

In my case, I have 1 laptop and 2 robots(1 indigo, 1 jade) with lidar, occupancy maps are generated using GMapping.

↧

How to reflect (or mirror) a point cloud in rviz ?

Hello,

I have successfully set-up ros so that a point cloud is sent to rviz every 100ms. It looks great, however, the visualization is mirrored about the z-y axis.

I have already tried using static_transform_publisher (http://wiki.ros.org/tf#static_transform_publisher), but this only allows me to rotate or translate. Is there a way to reflect/mirror about an axis using static_transform_publisher?

Any help is appreciated.

↧

tf: Adding a frame to transforms from the past

Hi everyone,

I have a question regarding the usage of tf and hope someone can help me answer it.

The problem setup is the following:

Imaging I have a robot with defined base_frame and I send the robots pose transform (in world frame) to tf with a constant rate. Now the robot has a camera rigidly mounted on it. So I define a camera frame with the robots base frame as parent frame and I also send this frame to tf. This way, I can know the cameras pose in the world frame if the robot moves. Assume at time t_0, the robot is at location s_0 and I get an image from the camera, then I do some processing to calculate the pose of a marker in the camera frame. Assume I finish processing the image at time t_K, where the robot moved to s_k. And now I define a marker frame with its parent frame being the camera frame. For this, I first define for the marker its pose transform in the camera frame, and then I send the transform to tf using the API call:

tf::TransformBroadcaster br;

br.sentTransform(tf::StampedTransform(markerTransform, t_0, "camera_frame_tf", "marker_frame_tf"));

I stamp this marker frame tranform with time t_0 instead of the current time t_k, because the image is taken at time t_0 and the calculated distance from marker to camera should be superposed with the robots/camera position at t_0. So using the above br.sendTransform callback, will the marker frame be superposed correctly to tf from time t_0?

Also at current time t_k, how do I get the markers pose w.r.t. the world frame back at time t_0?

Any clue will be appreciated!

Many thanks in advance.

↧